Data Scientist with Python

Some key topics in Python for data scientists:

1. Python Fundamentals

-

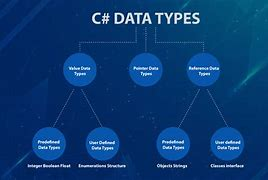

Variables, Data Types, and Operators:

- Grasping the building blocks of Python is essential for any data science project. This includes understanding how to store and manipulate different kinds of data (like numbers, text, and booleans) using variables, as well as performing operations on them using operators.

- Control Flow and Functions: Python provides structures like `if` statements, `for` loops, and `while` loops to control the flow of your code. Functions let you organize reusable blocks of code, promoting modularity and efficiency.

- Object-Oriented Programming (OOP): OOP is a programming paradigm that lets you structure your code around objects that encapsulate data (attributes) and related operations (methods). This makes your code more maintainable and reusable.
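As a minimal sketch of the fundamentals above, the following snippet stores values of several data types in variables, combines them with arithmetic, comparison, and logical operators, and wraps `if`/`for`/`while` logic in small functions. The names `classify_values` and `countdown` and all of the data are hypothetical, chosen only for illustration.

```python
# Variables holding different data types
count = 3               # int
price = 19.99           # float
name = "widget"         # str
in_stock = True         # bool

# Operators: arithmetic, comparison, and logical
total = count * price               # arithmetic
is_expensive = total > 50           # comparison
can_ship = in_stock and count > 0   # logical


def classify_values(values, threshold):
    """Split numbers into those below the threshold and those at or above it."""
    below, above = [], []
    for v in values:            # for loop: visit each item in turn
        if v < threshold:       # if/else: choose a branch per item
            below.append(v)
        else:
            above.append(v)
    return below, above


def countdown(n):
    """Collect n, n-1, ..., 1 using a while loop."""
    steps = []
    while n > 0:
        steps.append(n)
        n -= 1
    return steps


below, above = classify_values([4, 12, 7, 20], threshold=10)
print(type(name).__name__, round(total, 2), is_expensive, can_ship)
print(below, above)
print(countdown(3))
```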
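To make the OOP idea concrete, here is a small sketch of a class that bundles data (attributes) with operations on that data (methods), plus a subclass that reuses it through inheritance. `Dataset` and `ScaledDataset` are hypothetical names, not part of any library.

```python
class Dataset:
    """A tiny container that bundles data (attributes) with behavior (methods)."""

    def __init__(self, name, values):
        self.name = name              # attribute: label for the dataset
        self.values = list(values)    # attribute: stored copy of the data

    def mean(self):
        """Method: arithmetic mean of the stored values."""
        return sum(self.values) / len(self.values)

    def add(self, value):
        """Method: append a new observation."""
        self.values.append(value)


class ScaledDataset(Dataset):
    """Subclass that reuses Dataset but rescales the values on creation."""

    def __init__(self, name, values, factor):
        super().__init__(name, [v * factor for v in values])
        self.factor = factor


temps = Dataset("temperatures", [20, 22, 24])
temps.add(26)
print(temps.name, temps.mean())          # temperatures 23.0

doubled = ScaledDataset("doubled", [1, 2, 3], factor=2)
print(doubled.values, doubled.mean())    # [2, 4, 6] 4.0
```

Because `ScaledDataset` inherits `mean` and `add`, it gets that behavior without repeating any code.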

2. Data Manipulation and Analysis with Pandas

- Pandas Introduction: Pandas is a powerful library for data manipulation and analysis. It provides high-performance, easy-to-use data structures like Series (one-dimensional) and DataFrames (two-dimensional) for handling tabular data.

- Data Cleaning and Preprocessing: Real-world data often contains inconsistencies or missing values. Pandas offers tools to clean, filter, and transform your data to prepare it for analysis.

- Data Exploration and Analysis: You can use Pandas to perform various data analysis tasks, like calculating summary statistics, grouping data, and merging datasets.
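A minimal sketch of a typical cleaning pass in pandas, assuming a small made-up table: it drops rows missing a key field, normalizes inconsistent text, removes duplicate rows, and fills the remaining gaps with the column median. Whether to drop or fill missing values (and with what) is a judgment call that depends on your data; the median here is just one reasonable choice.

```python
import numpy as np
import pandas as pd

# Hypothetical raw data with common problems: missing values,
# inconsistent text formatting, and a duplicated row
raw = pd.DataFrame({
    "city": ["Paris", "paris ", "Berlin", "Berlin", None],
    "sales": [100.0, np.nan, 80.0, 80.0, 50.0],
})

clean = (
    raw
    .dropna(subset=["city"])                                    # drop rows with no city
    .assign(city=lambda d: d["city"].str.strip().str.title())   # "paris " -> "Paris"
    .drop_duplicates()                                          # remove exact duplicate rows
    .assign(sales=lambda d: d["sales"].fillna(d["sales"].median()))  # fill gaps with the median
    .reset_index(drop=True)
)
print(clean)
```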
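The exploration tasks mentioned above (summary statistics, grouping, and merging) can be sketched with two small hypothetical tables, `orders` and `stores`; the column names and values are invented for illustration.

```python
import pandas as pd

# Hypothetical tables: individual orders and a store-to-region lookup
orders = pd.DataFrame({
    "store": ["A", "A", "B", "B", "C"],
    "amount": [10, 30, 5, 15, 40],
})
stores = pd.DataFrame({
    "store": ["A", "B", "C"],
    "region": ["North", "South", "North"],
})

# Summary statistics: count, mean, std, min, quartiles, max
print(orders["amount"].describe())

# Merge (join) the two tables on their shared key column
merged = orders.merge(stores, on="store", how="left")

# Group by region and compute summary statistics per group
by_region = merged.groupby("region")["amount"].agg(["sum", "mean"])
print(by_region)
```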

3. Data Visualization with Matplotlib and Seaborn

- Data Visualization Fundamentals: Data visualization is crucial for exploring data, identifying patterns, and communicating insights. Matplotlib is a fundamental library for creating various plots and charts.

- Seaborn for Statistical Visualization: Seaborn builds on top of Matplotlib to provide a high-level interface for creating statistical graphics. It offers a wide range of built-in themes and aesthetics for creating publication-quality visualizations.

- Creating Custom Visualizations: While these libraries offer many ready-made chart types, you can also customize them or build new ones with Matplotlib's object-oriented API (working directly with `Figure` and `Axes` objects) for more tailored visualizations.
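Here is a minimal sketch of Matplotlib's object-oriented API: it creates a Figure with two explicit Axes, customizes titles, labels, line styles, and colors on each, and saves the result. It assumes the non-interactive `Agg` backend so it runs without a display, and the output filename `example_plot.png` is arbitrary. Seaborn's axes-level plotting functions accept an `ax=` argument as well, so they can draw into Axes created this way.

```python
import matplotlib
matplotlib.use("Agg")   # non-interactive backend: render to files, no window
import matplotlib.pyplot as plt
import numpy as np

x = np.linspace(0, 2 * np.pi, 100)

# Object-oriented API: one Figure containing two explicit Axes objects
fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(8, 3))

# Left panel: two customized line plots with a legend
ax1.plot(x, np.sin(x), label="sin(x)", color="tab:blue")
ax1.plot(x, np.cos(x), label="cos(x)", linestyle="--", color="tab:orange")
ax1.set_title("Line plot")
ax1.set_xlabel("x")
ax1.legend()

# Right panel: histogram of random samples
rng = np.random.default_rng(0)
ax2.hist(rng.normal(size=500), bins=20, color="tab:green", edgecolor="black")
ax2.set_title("Histogram")

fig.tight_layout()
fig.savefig("example_plot.png", dpi=100)   # hypothetical output file name
```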

4. Machine Learning with Scikit-learn

- Scikit-learn Introduction: Scikit-learn is a comprehensive library for machine learning, offering a wide range of algorithms for classification, regression, clustering, dimensionality reduction, and more.

- Supervised Learning: Supervised learning involves training a model on labeled data (inputs paired with known outputs) so that it can predict outcomes for new, unseen inputs. Common supervised learning algorithms include linear regression, decision trees, and support vector machines.

- Unsupervised Learning: Unsupervised learning deals with unlabeled data, where the goal is to uncover hidden patterns or structures within the data. Common unsupervised learning algorithms include k-means clustering and principal component analysis (PCA).

- Model Evaluation and Selection: After training machine learning models, it's crucial to evaluate their performance on unseen data. Scikit-learn provides metrics and tools to help you choose the best model for your task.
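A minimal sketch of the supervised workflow and model selection described above, using scikit-learn's bundled Iris dataset: hold out a test set, compare two candidate classifiers with 5-fold cross-validation on the training data only, then evaluate just the chosen model once on the unseen test set. The two candidate models and their settings are illustrative choices, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.tree import DecisionTreeClassifier

# Labeled data: feature matrix X and known class labels y
X, y = load_iris(return_X_y=True)

# Hold out 25% of the labeled data as an unseen test set
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

candidates = {
    "decision_tree": DecisionTreeClassifier(max_depth=3, random_state=0),
    "logistic_regression": LogisticRegression(max_iter=1000),
}

# Model selection: mean 5-fold cross-validated accuracy on the training set
cv_scores = {
    name: cross_val_score(model, X_train, y_train, cv=5).mean()
    for name, model in candidates.items()
}
best_name = max(cv_scores, key=cv_scores.get)

# Refit the chosen model on all training data, then evaluate once on the test set
best_model = candidates[best_name].fit(X_train, y_train)
test_acc = accuracy_score(y_test, best_model.predict(X_test))
print(cv_scores, best_name, round(test_acc, 3))
```

Keeping the test set out of the selection step means the final accuracy is an honest estimate of performance on unseen data.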
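For the unsupervised side, this sketch generates hypothetical unlabeled data (two well-separated clusters in five dimensions), reduces it to two dimensions with PCA, and then groups the points with k-means without ever seeing any labels. The synthetic data is an assumption made so the example is self-contained.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.decomposition import PCA

rng = np.random.default_rng(0)

# Hypothetical unlabeled data: two separated blobs of 50 points each, in 5-D
blob_a = rng.normal(loc=0.0, scale=0.5, size=(50, 5))
blob_b = rng.normal(loc=5.0, scale=0.5, size=(50, 5))
X = np.vstack([blob_a, blob_b])

# PCA: project the 5-D data onto its 2 directions of greatest variance
pca = PCA(n_components=2)
X_2d = pca.fit_transform(X)
print(pca.explained_variance_ratio_)

# k-means: partition the projected points into 2 clusters
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0)
labels = kmeans.fit_predict(X_2d)
print(np.bincount(labels))   # number of points assigned to each cluster
```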

5. Deep Learning with TensorFlow or PyTorch

- Deep Learning Introduction: Deep learning is a subfield of machine learning that uses artificial neural networks for complex tasks like image recognition, natural language processing, and time series forecasting.

- TensorFlow or PyTorch: TensorFlow and PyTorch are popular deep learning frameworks that provide tools for building, training, and deploying neural networks. While both offer similar functionality, they differ slightly in their syntax and approach.

- Building and Training Neural Networks: Deep learning involves designing neural network architectures, defining loss functions, and using optimization algorithms to train the network on your data.
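To show what designing an architecture, defining a loss, and optimizing mean mechanically, here is a deliberately framework-free sketch in plain NumPy, swapped in for TensorFlow or PyTorch (which automate the gradient computation): a one-hidden-layer network trained with a mean squared error loss and full-batch gradient descent to fit a hypothetical target, `y = sin(x)`. The layer size, learning rate, and step count are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical regression task: learn y = sin(x) on [-3, 3]
X = np.linspace(-3, 3, 200).reshape(-1, 1)
y = np.sin(X)

# Architecture: 1 input -> 16 tanh hidden units -> 1 linear output
W1 = rng.normal(scale=0.5, size=(1, 16))
b1 = np.zeros(16)
W2 = rng.normal(scale=0.5, size=(16, 1))
b2 = np.zeros(1)
lr = 0.05   # learning rate for plain gradient descent


def forward(inputs):
    """Return hidden activations and network predictions."""
    h = np.tanh(inputs @ W1 + b1)
    return h, h @ W2 + b2


losses = []
for step in range(2000):
    h, pred = forward(X)
    err = pred - y
    loss = np.mean(err ** 2)          # loss function: mean squared error
    losses.append(loss)

    # Backpropagation: gradient of the MSE loss with respect to each parameter
    grad_pred = 2 * err / len(X)
    grad_W2 = h.T @ grad_pred
    grad_b2 = grad_pred.sum(axis=0)
    grad_h = (grad_pred @ W2.T) * (1 - h ** 2)   # tanh'(z) = 1 - tanh(z)^2
    grad_W1 = X.T @ grad_h
    grad_b1 = grad_h.sum(axis=0)

    # Optimization step: move each parameter against its gradient (in place)
    W1 -= lr * grad_W1
    b1 -= lr * grad_b1
    W2 -= lr * grad_W2
    b2 -= lr * grad_b2

print(f"initial loss {losses[0]:.3f}, final loss {losses[-1]:.4f}")
```

In TensorFlow or PyTorch, the backpropagation lines are replaced by automatic differentiation and the update lines by a built-in optimizer, but the training loop keeps the same shape.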

6. Data Wrangling and Storage with Libraries like NumPy

- NumPy Introduction: NumPy is a fundamental library for numerical computing in Python. It provides efficient multi-dimensional arrays and linear algebra operations, often used as the foundation for other data science libraries.

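- Data Loading and Saving: NumPy offers functions to read and write text formats such as CSV (`np.loadtxt`, `np.genfromtxt`, `np.savetxt`) and its own binary formats (`.npy`, `.npz`) for efficient storage. It does not read Excel files directly; pandas (`pd.read_excel`) is the usual tool for those.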

- Working with Large Datasets: For datasets too large to fit into memory, NumPy's memory-mapped arrays (`np.memmap`) let you work with data stored on disk, and pandas can process large files in pieces (for example, with the `chunksize` parameter of `pd.read_csv`).
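A minimal sketch of the NumPy basics described above: a two-dimensional array, a linear algebra routine (`np.linalg.solve`), and round-tripping the array through a CSV text file (`np.savetxt`/`np.loadtxt`) and NumPy's binary `.npy` format. Files are written to a temporary directory, and the filenames are hypothetical.

```python
import os
import tempfile

import numpy as np

# A 2-D array (matrix) and a 1-D array (vector)
A = np.array([[2.0, 1.0], [1.0, 3.0]])
b = np.array([3.0, 5.0])

# Linear algebra: solve the system A @ x = b (exact solution is [0.8, 1.4])
x = np.linalg.solve(A, b)
print(x)

workdir = tempfile.mkdtemp()

# Text format: save as CSV, then load it back
csv_path = os.path.join(workdir, "matrix.csv")   # hypothetical file name
np.savetxt(csv_path, A, delimiter=",")
A_loaded = np.loadtxt(csv_path, delimiter=",")

# Binary format: NumPy's own .npy file
npy_path = os.path.join(workdir, "matrix.npy")   # hypothetical file name
np.save(npy_path, A)
A_npy = np.load(npy_path)
```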
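Two common out-of-memory patterns, sketched under simple assumptions: a NumPy memory-mapped array (`np.memmap`) whose data stays on disk, and pandas' `chunksize` option for reading a CSV in pieces while keeping only a running aggregate in memory. The file name and the small in-memory CSV (standing in for a genuinely large file) are hypothetical.

```python
import io
import os
import tempfile

import numpy as np
import pandas as pd

# 1) NumPy memory map: the array lives in a file on disk
path = os.path.join(tempfile.mkdtemp(), "big.dat")   # hypothetical file name
mm = np.memmap(path, dtype="float32", mode="w+", shape=(1_000_000,))
mm[:] = 1.0      # writes go through to the file
mm.flush()
del mm

# Reopen read-only and touch only a slice of the data
readonly = np.memmap(path, dtype="float32", mode="r", shape=(1_000_000,))
partial_sum = float(readonly[:1000].sum())

# 2) pandas chunked reading: process a CSV piece by piece
csv_text = "value\n" + "\n".join(str(i) for i in range(10_000))  # stand-in for a large file
total = 0
for chunk in pd.read_csv(io.StringIO(csv_text), chunksize=2_500):
    total += chunk["value"].sum()   # keep only the running total, not the chunks

print(partial_sum, total)
```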

7. Version Control with Git

- Git Introduction: Version control is essential for tracking changes to your code and data science projects. Git is a popular version control system that allows you to collaborate effectively and revert to previous versions if needed.

- Branching and Merging: Git allows you to create branches for working on different features or experiments in your project. You can then merge these branches back into the main codebase.

- Version Tracking and Collaboration: Version control helps maintain a history of changes, making it easier to collaborate with others and track the evolution of your project.
